CREATING RESILIENT DATA: CONSTRUCTING IT SYSTEMS WITH SELF-HEALING CAPABILITIES

HOW TO BUILD DATA RESILIENCE

In today’s data-driven age, data has become a strategic asset for organisations.

Businesses increasingly recognise the value of using data to inform decisions, gain competitive advantage, and unlock new opportunities, so maintaining the quality and reliability of data has become paramount. Data anomalies, errors and inconsistencies can lead to inaccurate insights, poor decision-making, and compromised business outcomes. These consequences affect a wide range of professionals: data engineers, who are responsible for data pipelines; data analysts and scientists, whose models and reports depend on accurate data; and business stakeholders, who rely on trustworthy insights to make decisions. Self-healing data pipelines are one response to these challenges.

EXPLORE THE DATA

It’s all in the data

The team at RUBIX, in close collaboration with RUBIX’s Chief Data Officer, built a pilot self-healing data pipeline model using Python.

The model uses algorithms to automatically detect and remediate data anomalies, errors and inconsistencies, thereby reducing manual intervention, enhancing data quality, and improving the overall efficiency of data processing.

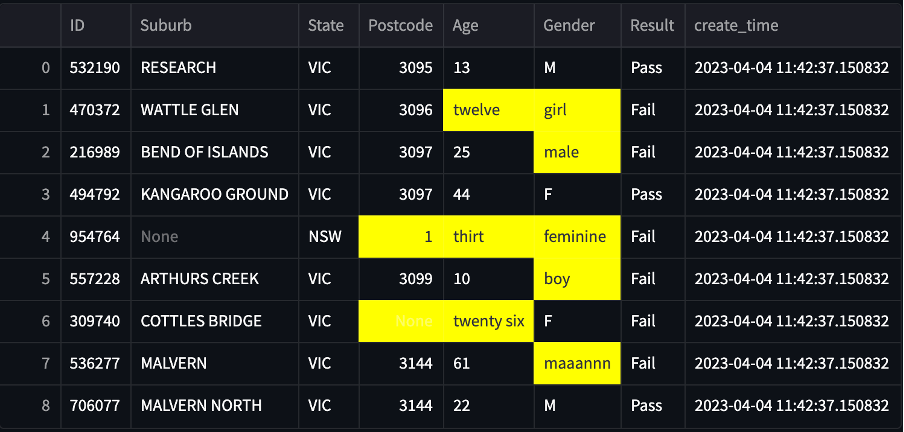

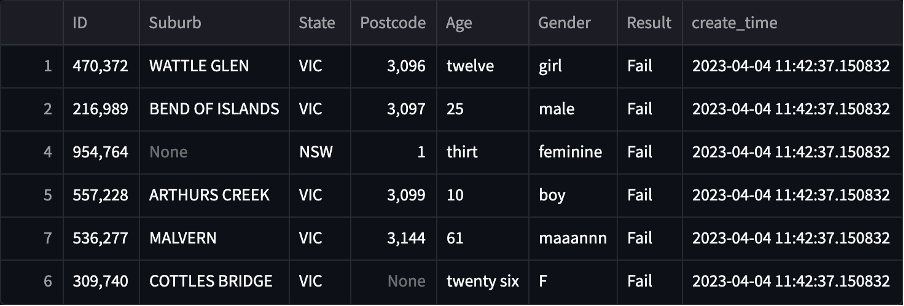

Dummy customer data was used as input. The model variables in focus were Age, Gender and Postcode. Age and Gender are independent variables, while Postcode is related to the Suburb and State variables. For example, the Postcode 3144 corresponds to the Suburb Malvern North and the State VIC. Remediation of Postcode therefore depends on the Suburb and State variables being populated.
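To make the dependency between the variables concrete, here is a minimal sketch of a detection step in Python. It is not the pilot model's code: the function name `find_anomalies`, the accepted Gender labels, the 0-120 age range and the dictionary layout of a record are all assumptions made for illustration.

```python
import re

# Assumed label set and postcode shape; adjust to the organisation's own rules.
VALID_GENDERS = {"Male", "Female"}
POSTCODE_RE = re.compile(r"\d{4}")  # Australian postcodes have four digits


def find_anomalies(record):
    """Return a dict mapping each suspect field to a short reason."""
    issues = {}

    # Age is independent: it is checked on its own.
    age = record.get("Age")
    if not (isinstance(age, int) and 0 <= age <= 120):
        issues["Age"] = "not an integer in the range 0-120"

    # Gender is independent as well.
    if record.get("Gender") not in VALID_GENDERS:
        issues["Gender"] = "not a recognised label"

    # Postcode depends on Suburb and State: it can only be repaired
    # when both of those fields are populated.
    postcode = str(record.get("Postcode") or "")
    if not POSTCODE_RE.fullmatch(postcode):
        if record.get("Suburb") and record.get("State"):
            issues["Postcode"] = "invalid, remediable from Suburb and State"
        else:
            issues["Postcode"] = "invalid, not remediable (Suburb or State missing)"

    return issues
```

A record with Age "thirty", Gender "M" and Postcode "31x4" would be flagged on all three fields, while a clean record returns an empty dictionary.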

It is important to note that organisations are free to define the data fields relevant to their domain or industry. Building a self-healing data pipeline means considering the use case, the error patterns to be addressed, and the expected results that are meaningful to the organisation. Because the variables within the pipeline are flexible, the approach is adaptable and scalable: it can accommodate any type of data and any number of fields.

Input dummy data with erroneous values (highlighted):

THE DEVELOPMENT PROCESS

Leveraging OpenAI’s GPT APIs and utilising machine learning techniques

During the development process, various methods were explored to achieve optimal anomaly detection and pattern understanding. Two prominent approaches considered were leveraging OpenAI’s GPT APIs and utilising machine learning techniques. These two approaches were utilised in two different models to test the efficiency of each.

As a large language model, GPT-3 is primarily focused on language-related tasks. It successfully remediated errors in the Age and Gender columns, but its strengths lie in processing and generating natural language text based on context, so its capabilities for structured or numerical pattern matching are limited. The GPT-3 model was not able to remediate the Postcode errors without help from a database mapping postcodes to suburbs and states. In conclusion, GPT-3 may not be the best choice for building a self-healing data pipeline that caters to a variety of data types.

The GPT-4 API was considered as an alternative solution; however, OpenAI is currently only offering prioritised API access to a select group of developers involved in its model evaluation. We eagerly anticipate the opportunity to experiment with the GPT-4 API when it becomes available and to test its effectiveness when applied to self-healing data pipelines.

The second model combined automation with supervised learning algorithms. This model performed well in remediating erroneous values across the three columns Age, Gender and Postcode. Machine learning techniques have proven powerful for pattern matching and remediation in various domains.

- The supervised learning technique used is a Support Vector Machine (SVM) classifier, trained on labelled examples for the Gender column to recognise discrepancies.

- Age remediation involved a Python library that enabled the translation of valid words into their corresponding integers.

- Postcode remediation involved a call to the postcodeapi.com.au API to look up Australian postcodes and their affiliated suburbs and states.
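The Age and Postcode steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the pilot's implementation: `words_to_age` is a small hand-written stand-in for the word-to-number library, which the article does not name, and `POSTCODE_LOOKUP` is an in-memory stand-in for the postcodeapi.com.au call, whose request format is not described here. The Gender classifier is left out to keep the sketch dependency-free.

```python
# Hand-written stand-in for a word-to-number library (covers 0-99 only).
UNITS = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19,
}
TENS = {
    "twenty": 20, "thirty": 30, "forty": 40, "fifty": 50,
    "sixty": 60, "seventy": 70, "eighty": 80, "ninety": 90,
}

# Hypothetical in-memory table standing in for the postcode API lookup.
# The single entry is the example given in the article.
POSTCODE_LOOKUP = {("MALVERN NORTH", "VIC"): "3144"}


def words_to_age(text):
    """Translate e.g. 'forty-two' to 42; return None if not recognised."""
    tokens = text.lower().replace("-", " ").split()
    if len(tokens) == 1:
        word = tokens[0]
        return UNITS.get(word, TENS.get(word))
    # Two tokens must be a tens word followed by a unit from one to nine.
    if len(tokens) == 2 and tokens[0] in TENS and 1 <= UNITS.get(tokens[1], 0) <= 9:
        return TENS[tokens[0]] + UNITS[tokens[1]]
    return None


def remediate_postcode(record, lookup=POSTCODE_LOOKUP):
    """Look up the postcode for a record's Suburb and State, if both exist."""
    suburb, state = record.get("Suburb"), record.get("State")
    if not suburb or not state:
        return None  # Postcode cannot be repaired without both fields
    return lookup.get((suburb.strip().upper(), state.strip().upper()))
```

Returning `None` instead of guessing keeps unrepairable values visible, so they can be routed to manual review rather than silently overwritten.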

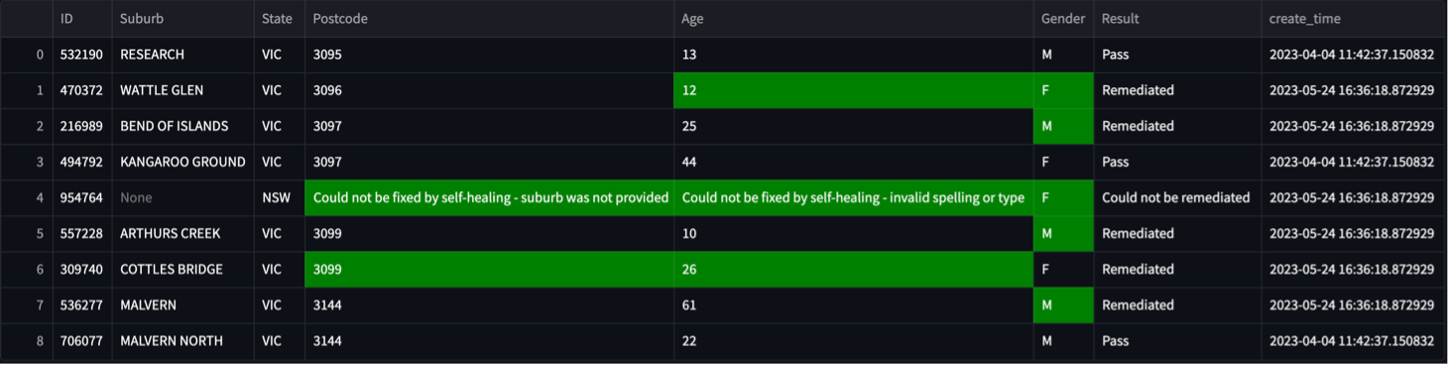

Remediated data (highlighted):

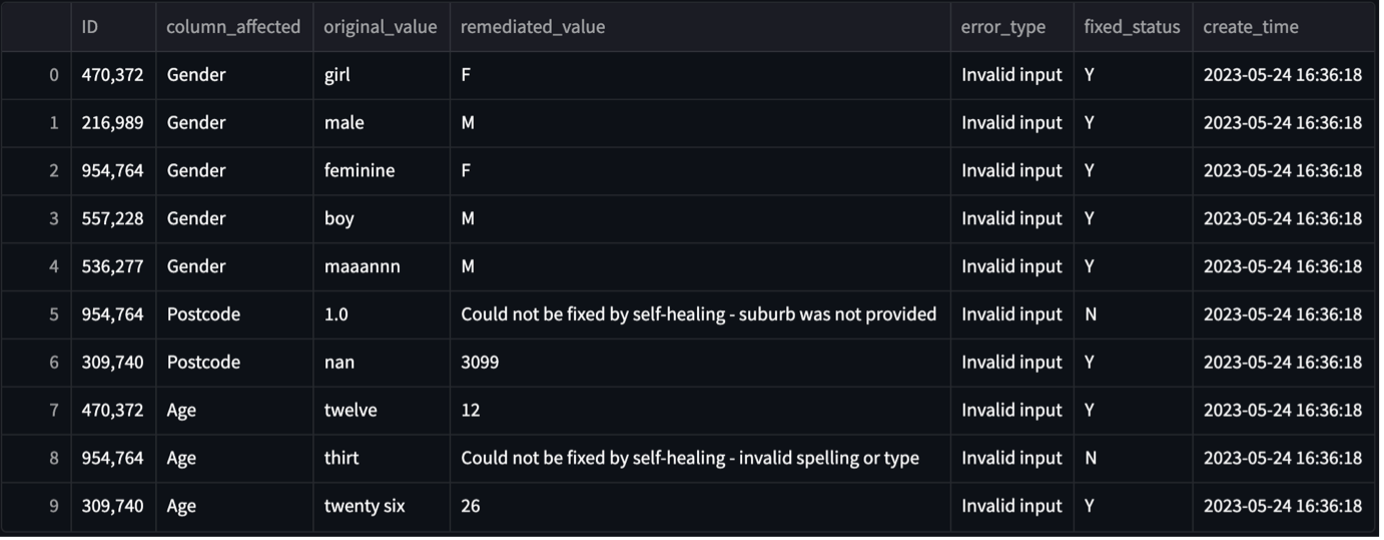

Having an audit log of historical records and a metadata log is crucial when implementing a self-healing data pipeline. The audit log ensures that the integrity of the source records remains intact. Together, these logs serve important purposes such as identifying patterns of errors or recurring issues, assessing the effectiveness of the self-healing data pipeline, and storing the historical erroneous data to ensure transparency and traceability throughout the data remediation process.
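As a rough illustration of how the two logs could be kept, the sketch below snapshots each source record before any fix is applied and writes one metadata entry per changed field. The function name `remediate_with_logs` and every log field name (`logged_at`, `original`, `old_value`, and so on) are hypothetical; the layout of the pilot's actual logs may differ.

```python
import copy
from datetime import datetime, timezone


def remediate_with_logs(record, fixes, audit_log, metadata_log, method="rule"):
    """Apply fixes to a copy of record, logging the original and each change.

    record:       dict holding one source row
    fixes:        dict of field name -> remediated value
    audit_log:    list that receives an untouched snapshot of the source row
    metadata_log: list that receives one entry per remediated field
    """
    timestamp = datetime.now(timezone.utc).isoformat()

    # Audit log: keep the erroneous source record exactly as it arrived.
    audit_log.append({"logged_at": timestamp, "original": copy.deepcopy(record)})

    healed = dict(record)  # the source record itself is never mutated
    for field, new_value in fixes.items():
        # Metadata log: what changed, from what, to what, and by which method.
        metadata_log.append({
            "logged_at": timestamp,
            "field": field,
            "old_value": record.get(field),
            "new_value": new_value,
            "method": method,
        })
        healed[field] = new_value
    return healed
```

Counting metadata entries by `field` or `method` over time is one simple way to surface recurring error patterns and to gauge how much the pipeline is actually repairing.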

Audit log of historical records:

Metadata log:

Boosting Productivity and Efficiency through Automation and Machine Learning

In summary, the model leveraging automation and machine learning techniques performed best in detecting anomalies and remediating errors for this use case.

Our automation and machine learning model excels in detecting anomalies and enhancing data quality, empowering organisations with self-healing data pipelines for enhanced productivity and trustworthy data infrastructures.

Self-healing data pipelines ensure that data quality is consistently monitored and improved, reduce manual labour and empower individuals across various roles to achieve higher productivity and efficiency in their day-to-day operations. As organisations increasingly rely on data-driven decision-making, self-healing data pipelines emerge as a vital component in building robust and trustworthy data infrastructures.
